What Are AI Agents?

Artificial intelligence (AI) agents are systems that can act autonomously on behalf of a user or another system to complete tasks.

Unlike standalone AI models that only generate or predict text, agents combine reasoning, memory, and the ability to take real-world actions. These capabilities allow agents to interact dynamically with external systems, maintain context across sessions, and solve complex problems with minimal human intervention.

Architecture

Agent architectures define how intelligence, memory, and action are organized into a functioning system. At the highest level, they specify where reasoning occurs, how agents coordinate, and how external systems integrate.

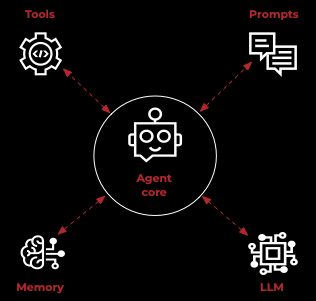

Core components

Agents are built from several core components. Each component plays a distinct role in turning user goals into real-world solutions. Together, they provide the reasoning, context, and execution layers that make agents more than just large language models (LLMs).

Large language models

An LLM is the reasoning engine of an agent. It performs the high-level cognitive tasks that define an agent’s intelligence, including natural language processing, reasoning, planning, and decision making.

The LLM interprets user goals and determines when and how to call on other components, such as tools or memory, to complete a task.

Tools

Instead of relying solely on static training data, agents use tools to interact dynamically with the world. Tools are external functions, services, or APIs that an agent invokes to perform specific actions. Examples include retrieving up-to-date information from the web, querying a database, sending an email, executing code, or interacting with a third-party application.

An LLM decides when and how to invoke a tool by generating a structured command. The agent’s runtime executes this command, bridging the gap between language prediction and real-world action.
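As a rough illustration of that bridge, the following Python sketch shows a runtime dispatching a structured command. It assumes the model emits JSON with `tool` and `arguments` keys. Real model providers define their own formats, and the tools here (`get_order_status`, `add`) are hypothetical stand-ins for external services.

```python
import json
from typing import Any, Callable

# Hypothetical tools; a real agent would call external services here.
def get_order_status(order_id: str) -> str:
    return f"Order {order_id} has shipped."

def add(a: float, b: float) -> float:
    return a + b

# Registry mapping the tool names the LLM may emit to Python callables.
TOOLS: dict[str, Callable[..., Any]] = {
    "get_order_status": get_order_status,
    "add": add,
}

def execute_tool_call(command: str) -> Any:
    """Parse a structured tool call emitted by the LLM and run it."""
    call = json.loads(command)
    name, args = call["tool"], call.get("arguments", {})
    if name not in TOOLS:
        raise ValueError(f"Unknown tool: {name}")
    return TOOLS[name](**args)

# Stand-in for model output; this JSON shape is an assumption.
llm_output = '{"tool": "get_order_status", "arguments": {"order_id": "A123"}}'
print(execute_tool_call(llm_output))
```

Keeping the registry explicit means the runtime, not the model, controls which functions can actually run.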

Agents integrate with tools in the following ways:

- Direct-to-interface: The agent interacts directly with a service through a well-defined interface, such as an API endpoint or an SDK library.
- Abstract intention: The agent expresses an intention, and a mediation layer, such as one built on the Model Context Protocol (MCP), translates this intention into an API call.
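To contrast the two integration styles, this sketch builds the same request both ways. The endpoint `https://api.example.com/v1/weather`, the `get_weather` intention name, and the `MediationLayer` class are hypothetical. The mediation layer is a plain-Python stand-in for an MCP-style server and does not use any real MCP SDK.

```python
from dataclasses import dataclass
from typing import Any, Callable
from urllib.parse import urlencode

# Hypothetical endpoint used only for illustration.
WEATHER_URL = "https://api.example.com/v1/weather"

@dataclass(frozen=True)
class ApiRequest:
    method: str
    url: str

def _build_weather_request(city: str) -> ApiRequest:
    return ApiRequest("GET", WEATHER_URL + "?" + urlencode({"city": city}))

# Direct-to-interface: the agent itself knows the endpoint and builds the call.
def direct_call(city: str) -> ApiRequest:
    return _build_weather_request(city)

# Abstract intention: the agent only names what it wants; the mediation
# layer owns the mapping from intentions to concrete API calls.
class MediationLayer:
    def __init__(self) -> None:
        self._handlers: dict[str, Callable[[dict[str, Any]], ApiRequest]] = {
            "get_weather": lambda params: _build_weather_request(params["city"]),
        }

    def resolve(self, intention: str, params: dict[str, Any]) -> ApiRequest:
        if intention not in self._handlers:
            raise KeyError(f"No handler for intention: {intention}")
        return self._handlers[intention](params)

layer = MediationLayer()
print(direct_call("Paris"))
print(layer.resolve("get_weather", {"city": "Paris"}))
```

Both paths produce the same request, but in the mediated version the agent never needs to know the endpoint, so the API can change without changing the agent.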

Learn more about tool calling in Tools.

Memory

Memory provides agents with the ability to store and retrieve information from previous interactions, enabling them to maintain context and handle stateful, multistep tasks. Without memory, each agent interaction would be independent and isolated, limiting the agent’s ability to perform complex work.

Agent memory typically takes two forms:

- Short-term memory: Keeps track of the current conversation or task, ensuring continuity. For example, it lets an agent remember what the user asked three steps earlier in a workflow.
- Long-term memory: Stores knowledge over time, such as user preferences, historical actions, or facts relevant to recurring tasks. Long-term memory enables agents to improve across sessions and adapt to individual users.
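The split between the two memory types can be sketched with a toy `AgentMemory` class (a hypothetical name). A bounded deque stands in for short-term memory and a plain dict stands in for long-term storage, which in practice would usually be a database or vector store.

```python
from collections import deque

class AgentMemory:
    """Toy agent memory: a bounded short-term buffer plus a long-term store."""

    def __init__(self, short_term_limit: int = 5) -> None:
        # Short-term memory: only the most recent turns of the current task.
        self.short_term: deque[str] = deque(maxlen=short_term_limit)
        # Long-term memory: facts that outlive a session.
        self.long_term: dict[str, str] = {}

    def remember_turn(self, turn: str) -> None:
        self.short_term.append(turn)  # Oldest turn is dropped once full.

    def store_fact(self, key: str, value: str) -> None:
        self.long_term[key] = value

    def context(self) -> str:
        """Text an agent could prepend to its next LLM call."""
        facts = "; ".join(f"{k}={v}" for k, v in self.long_term.items())
        recent = " | ".join(self.short_term)
        return f"Known facts: {facts}\nRecent turns: {recent}"

    def end_session(self) -> None:
        # Short-term context is discarded; long-term facts persist.
        self.short_term.clear()

memory = AgentMemory(short_term_limit=2)
memory.store_fact("shoe_size", "10")
for turn in ["Hi", "Find running shoes", "Under $100"]:
    memory.remember_turn(turn)
print(memory.context())
memory.end_session()
print(memory.long_term)
```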

Prompts

Prompts define how an LLM interprets and responds to tasks. They provide instructions, context, and constraints that shape agent behavior. In agentic systems, prompts aren’t limited to user input. They can also include system prompts (defining role, goals, or style) and dynamically generated prompts (created as the agent reasons through steps).

Well-designed prompts serve as the operating instructions that guide the agent’s reasoning.
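The three prompt sources can be combined into the input for a single reasoning step, as in this sketch. The role/content message format mirrors common chat-completion APIs but is an assumption here, as is sending the dynamically generated prompt as a `user` message. The system prompt text and `build_prompt` are illustrative.

```python
from typing import TypedDict

class Message(TypedDict):
    role: str
    content: str

# System prompt: defines the agent's role, goals, and style (example text).
SYSTEM_PROMPT = (
    "You are a retail support agent. Answer concisely and "
    "only use the tools you are given."
)

def build_prompt(user_input: str, observations: list[str]) -> list[Message]:
    """Assemble the messages sent to the LLM for one reasoning step."""
    messages: list[Message] = [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": user_input},
    ]
    # Dynamically generated prompt: built by the agent from intermediate results.
    if observations:
        notes = "\n".join(f"- {obs}" for obs in observations)
        messages.append(
            {"role": "user", "content": f"Observations so far:\n{notes}\nDecide the next step."}
        )
    return messages

for message in build_prompt("Where is my order?", ["Order A123 shipped on Monday."]):
    print(message["role"], "->", message["content"])
```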

Workflows

The structure of an agentic workflow depends on the number of agents involved, how responsibilities are delegated, and how coordination is managed. Two common patterns are single-agent workflows and multi-agent workflows.

Single-agent workflow

In a single-agent workflow, one LLM manages reasoning, tool use, and memory. This model is simple to deploy and delivers quick results because there’s no orchestration overhead. It works best for narrow, task-driven scenarios such as scheduling meetings, resolving straightforward support tickets, or generating reports. Although efficient, a single agent can struggle with problems that span multiple domains or require deeper specialization.

Multi-agent workflow

Multi-agent workflows distribute tasks across specialized agents that collaborate to reach an outcome. A coordinator or manager agent often handles communication and delegation. This model mirrors human teamwork and is well suited to complex, multi-domain scenarios, although coordination adds overhead that a single-agent workflow avoids.

For example, one agent might gather research, another summarize findings, and a third generate tailored recommendations. In business contexts, agents might divide responsibilities across data extraction, compliance checks, and payment processing.
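A coordinator that delegates to specialists can be sketched as follows. The three agent functions are hypothetical stubs that return strings instead of calling an LLM, and the coordinator follows a fixed plan; in practice, a manager agent might use an LLM to decide which specialist to call next.

```python
from typing import Callable

# Hypothetical specialist agents; each would wrap its own LLM, prompts, and tools.
def research_agent(topic: str) -> str:
    return f"notes on {topic}"

def summary_agent(notes: str) -> str:
    return f"summary of {notes}"

def recommendation_agent(summary: str) -> str:
    return f"recommendations based on {summary}"

class Coordinator:
    """Delegates each step of a plan to the specialist registered for it."""

    def __init__(self, specialists: dict[str, Callable[[str], str]]) -> None:
        self.specialists = specialists

    def run(self, plan: list[str], task: str) -> str:
        result = task
        for role in plan:
            # Each specialist receives the previous agent's output as input.
            result = self.specialists[role](result)
        return result

coordinator = Coordinator({
    "research": research_agent,
    "summarize": summary_agent,
    "recommend": recommendation_agent,
})
print(coordinator.run(["research", "summarize", "recommend"], "trail running shoes"))
```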

Agent lifecycle

Agents operate in a reasoning-action loop that enables them to interpret user input, determine a course of action, and dynamically adapt based on results.

- Input: The process begins with a user instruction, system event, or scheduled task.
- Interpretation: The LLM processes the input, consulting short-term memory to maintain continuity with previous interactions.
- Reasoning: The agent evaluates next steps, determining whether the task requires external tools, additional context, or further reasoning.
- Action: If the agent requires external data or capabilities, it invokes a tool to perform a specific action, such as querying long-term memory or triggering an integration.
- Observation: The agent processes the retrieved context and incorporates it into its reasoning.
- Feedback: The agent evaluates whether the outcome meets the intended objective. If not, it refines its reasoning or actions in the next cycle.
- Response: The LLM generates a final response or executes the requested action.
- Memory update: The agent stores relevant details so it can improve future interactions.

This loop is iterative: agents repeat reasoning and action cycles until they produce a satisfactory result. By chaining together reasoning, action, and feedback, agents adapt to intermediate results within a task, and through memory updates they can improve across interactions.
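The loop can be sketched in Python under heavy simplifying assumptions: `fake_llm` is a rule-based stand-in for the model, `lookup_order` is a hypothetical tool, and `max_steps` is an added safeguard so the loop always terminates. Comments map each line to the lifecycle stage it represents.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Decision:
    kind: str          # "tool" to act, "respond" to finish
    tool: str = ""
    argument: str = ""
    text: str = ""

# Hypothetical tool registry.
TOOLS: dict[str, Callable[[str], str]] = {
    "lookup_order": lambda order_id: f"order {order_id} ships tomorrow",
}

def fake_llm(history: list[str]) -> Decision:
    """Rule-based stand-in for the LLM's interpretation and reasoning steps."""
    if len(history) == 1:
        # Only the user input is known, so external data is needed.
        return Decision("tool", tool="lookup_order", argument="A123")
    # Feedback: a tool result is available and judged sufficient for the goal.
    return Decision("respond", text=f"Answer to '{history[0]}': {history[-1]}")

def run_agent(user_input: str, long_term: list[str], max_steps: int = 5) -> str:
    history = [user_input]                  # Input, kept in short-term memory
    for _ in range(max_steps):
        decision = fake_llm(history)        # Interpretation and reasoning
        if decision.kind == "tool":
            result = TOOLS[decision.tool](decision.argument)  # Action
            history.append(result)          # Observation
            continue                        # Not done yet: run another cycle
        long_term.append(decision.text)     # Memory update
        return decision.text                # Response
    return "Stopped: step limit reached"

saved: list[str] = []
print(run_agent("Where is order A123?", saved))
```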

Use cases

Agents are automating work and augmenting human decision making across industries. The following table lists example applications:

| Use case | What the agent does | Example |
|---|---|---|
| Digital shopping assistant | Guides customers through a retail site by answering questions, recommending products, assisting with checkout, and tracking orders. Provides a conversational interface that personalizes the shopping experience. | A retail agent helps a customer find running shoes in their size, compares styles based on budget and activity, adds the chosen pair to the cart, applies a promo code, and provides delivery tracking updates. |
| Customer support | Resolves common inquiries (password resets, billing, order tracking), maintains continuity across channels, and escalates complex cases with summaries. | An airline agent handles rebooking and baggage claims, while routing loyalty point or status discrepancies to human agents. |
| Personal productivity | Manages scheduling, email triage, and travel bookings across apps. Acts directly on behalf of the user instead of just sending reminders. | A personal agent summarizes daily email threads, drafts responses, and generates weekly travel itineraries. |
| Business automation | Orchestrates multistep workflows like invoice validation, compliance checks, and HR onboarding. Adapts to system changes or exceptions. | A bank agent automates compliance tasks, such as collecting documents, verifying identities, and escalating suspicious cases. |